The primary learning experience for BUSN 5000 students at the Terry College of Business involves coding exercises with R. While these can be daunting tasks for business and economics students with little to no coding experience, generative artificial intelligence (AI) tools like ChatGPT have emerged as valuable tutors that can assist in the coding process.

BUSN 5000 is just one of many classes at the University of Georgia being reshaped by the rise of AI.

Generative AI tools have exploded in popularity since OpenAI launched ChatGPT in November 2022. Their rapid adoption is already revolutionizing the workplace, but the rise of AI presents a set of unique challenges to colleges and universities around the world.

Why It’s Newsworthy: This article explores how instructors and faculty at UGA are addressing the rise of generative AI, a field that has surged in popularity since ChatGPT launched last year. The new technology could disrupt almost every aspect of university operations, including admissions and academic functions. At the forefront of colleges’ wariness about adopting AI are concerns about cheating and plagiarism.

Many universities have outright banned the use of AI, while others are embracing the technology and rolling it out at scale. Prior to the start of the Fall 2023 semester, the University of Michigan launched a suite of custom AI tools for students, faculty and staff. U-M is believed to be the first university to offer a custom AI platform to its entire community.

I’ve spent the last three months exploring the adoption of AI at the University of Georgia, where faculty and staff are generally excited about the opportunities that AI creates for learning.

This optimism is matched by skepticism toward the new technology.

UGA’s experience with AI likely mirrors trends at other universities across the U.S. and has significant implications for the future of higher education.

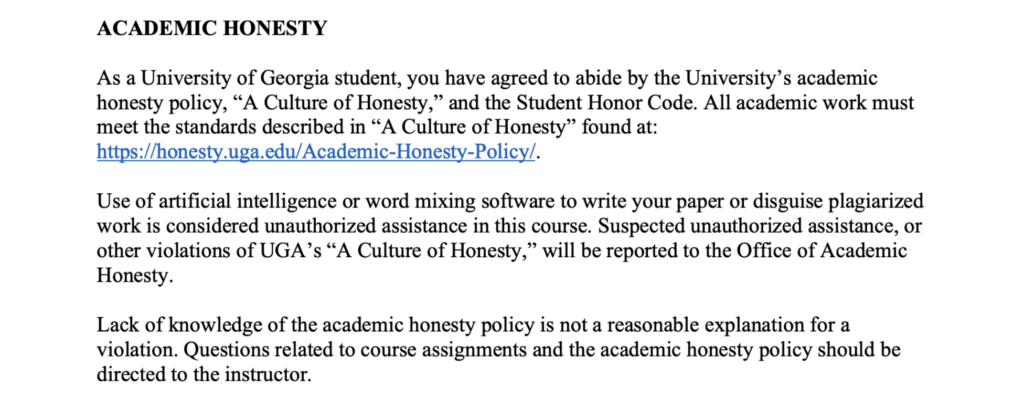

Syllabus Policies

The Center for Teaching and Learning (CTL) is leading efforts to judiciously integrate AI technology into the classroom. Earlier this year, it released ChatGPT Guidance for Instructors to help professors formulate syllabus policies that address the use of generative AI tools.

“Our general advice has been for instructors to consider the ways AI can help or hinder students in achieving course learning objectives,” CTL Director Megan Mittelstadt said. “[Instructors might] identify the ways student engagement with generative AI would detract or stand in the way of students gaining important course-related skills.”

Mittelstadt says total transparency between students and instructors is key to integrating generative AI into higher education.

“When I’m thinking about this, I think about the ways in which faculty already communicate to their students,” Mittelstadt said. “Considering that degree of transparency that we’re already providing for our students, we also as instructors need to bring to the table an explanation of the ways that we do or do not hope students will interact with generative AI.”

Allison Mawn, a third-year student with a double major in journalism and economics, says her BUSN 5000 instructor Christopher Cornwell is encouraging his students to use AI to help write code.

“It’s definitely been interesting learning more about AI because so many people are so scared about AI, and then here’s Dr. Cornwell, he’s so excited about it all the time,” Mawn said.

Instructors’ expectations vary widely across UGA. Many professors opt for policies that label the use of generative AI as unauthorized assistance, while others actively seek out teaching innovations with tools like ChatGPT.

“What we’ve done in [BUSN 5000] is very aggressively promote the idea that generative AI can be helpful,” Cornwell said.

The underlying responsibility of every instructor is to equip students with the skills they need to be successful in the AI-powered workplaces of the future.

“I think we want our students to have evergreen skills that will ensure that they’re resilient decades down the road,” Mittelstadt said.

AI In The Classroom

Students and instructors at UGA are already finding creative ways to leverage AI in the classroom.

Public relations professor Nicholas Eng used ChatGPT for an in-class exercise in which students rewrote an AI-generated press release.

“The goal of my assignment was to have students look at an AI-generated press release and critique it to see the limitations of what AI can do, and how human creativity and human voice are really important in having an effective piece of communication,” Eng said.

Instructors have been encouraged to share their teaching innovations to foster discussions about creative uses for AI. Mittelstadt shared some creative examples with me during our conversation last month.

An English instructor has provided the option for students to use generative AI as a “peer writing reviewer” instead of a human peer. The instructor provides students with prompts that ensure they are soliciting the right sorts of feedback on their writing.

At the Terry College of Business, graduate program faculty are exploring generative AI’s business uses and strengths, while also working to identify its weaknesses. Generative AI is covered briefly in a master’s level audit course, and more in-depth in accounting analytics.

Students are similarly finding innovative ways to enhance their own learning with AI. They had an opportunity to share their uses for AI at a panel last spring.

“We heard so many interesting examples from the students in the room about the ways they are already using generative AI,” Mittelstadt said. “I was so impressed by the creative ways that UGA students are using [it].”

Some students use AI to create personalized lesson plans or study plans. For example, a student who has two weeks to prepare for an exam could input their schedule and the exam’s content into a generative AI tool. The AI could then construct a two-week study plan to help the student prepare.

Mawn’s BUSN 5000 class primarily uses AI as a coding assistant.

“One of the big things we do in the class is coding and programming with R, so one thing he’s really been encouraging us to do is use [AI] to help us write the code,” Mawn said.

Generative AI can also serve as a study partner, testing students with personalized multiple-choice quizzes, and it can rewrite complicated material at a simpler or more advanced level.

“[Cornwell] even calls it our tutor,” Mawn said. “It’s something he very much is trying to keep us up to date on and be like ‘Hey, this is a thing that’s going to be following y’all for the rest of forever so you might want to take advantage of it.’”

Academic Integrity

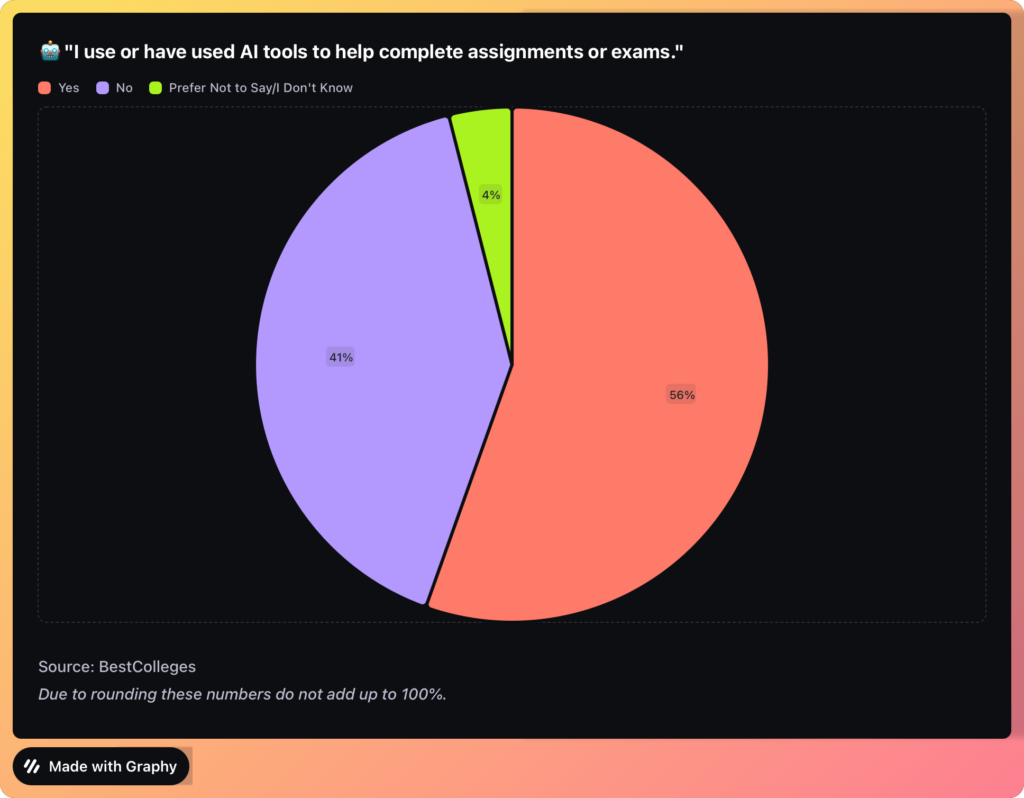

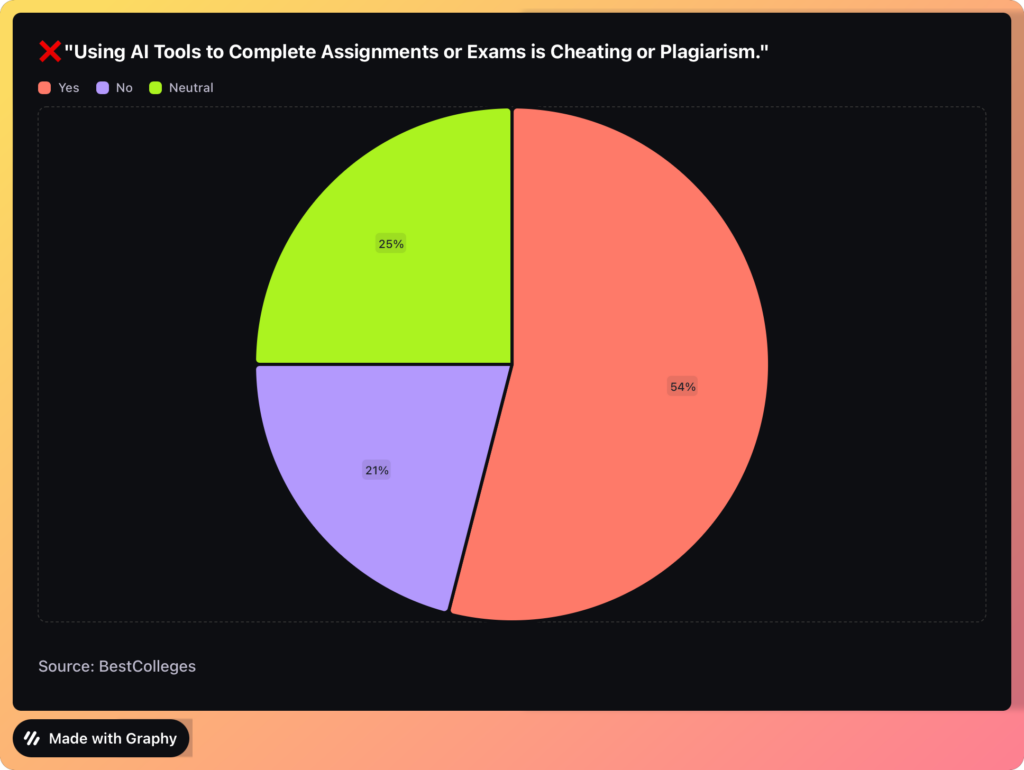

A leading concern among instructors and faculty is that generative AI tools could be used to cheat or plagiarize.

“I would say that it is a concern that has come up when I’m grading, because in my class right now every single week I have my students write a reflective essay,” Eng said. “Sometimes I do think and wonder if generative AI is being used.”

While instructors have been forced to spell out their expectations for students, many still opt for lenient approaches that trust students to adhere to UGA’s academic honesty policy.

“In my syllabus, I’m very clear that students aren’t supposed to use generative AI to help them complete their assignments,” Eng said. “I could check, but I trust that my students are adults who have integrity and will uphold standards of making sure their work is their own.”

Cornwell says concerns about AI-related academic dishonesty are often “fruitless.”

“If you take the next step of trying to monitor the use of GPT in writing assignments, you believe that there is some software out there that can decode it, I think you’re barking up the wrong tree,” Cornwell said.

It’s true that cracking down on AI-generated assignments is essentially impossible today. Most research on AI detection suggests that free AI content detectors achieve an average accuracy of only about 60%, while paid tools reach roughly 80%. Even that is insufficient in academic settings, where a false accusation of cheating can carry serious consequences for a student.

Cornwell adds that students who rely on AI too heavily only hinder their own ability to master new skills.

“If you think productive is [using GPT to provide code] and pasting that into the assignment, that’s fine, I basically tell them that’s fine,” Cornwell said. “But that’s also you deciding that you’ll be happy to have GPT be a substitute for you, and you will lose.”

A Look Ahead

Institutions of higher learning will spend the next few years wrestling with questions about AI. The rapidly evolving technology has the potential to revolutionize education, but it also raises serious concerns about data privacy, cheating and the dehumanization of learning.

The responsibility ultimately lies with students to use AI to assist in their learning without allowing it to replace them.

“The thing we talk about in class all the time is that you need to learn how to complement AI, or have it complement you so it doesn’t substitute for you,” Cornwell said.

Discussions about AI’s place in higher education will likely be intensified by the technology’s continued evolution.

“This field is moving so quickly, all technology is moving quickly,” Mittelstadt said. “We’re already seeing ways that professionals are using generative AI tools in the field, and that’s only going to change as the technology changes.”

Although consumer-facing AI tools are still relatively new, it’s reasonable to assume that adoption will only increase as more advanced tools are released. Only time will tell what the future holds for AI-powered learning.

“For homework we watched a video from 2018 about a talk given at Google about the future of AI,” Mawn said. “It’s so interesting even five years later to see what has come true, what’s already been surpassed and what we’re still on track to make happen in the future.”

Jack Shields is a senior majoring in journalism.