Assuming you’ve used the internet before, you may have heard some of the buzz about quantum computing. For instance, you may have heard that it’ll change the world as we know it, that it will drastically impact an array of industries, and maybe even that it will cause a “technological apocalypse” if and when it comes to fruition. An idealized quantum computer could perform calculations and analyze data that would take even the most powerful classical computer humans could build (the kind of regular computer we use today) thousands of years.

According to the Quantum Computing Report, 98 organizations are currently working on the technology behind this highly competitive, heavily funded race toward quantum computing, including between 20 and 30 big tech companies such as Google, Microsoft and IBM.

Many of these companies currently have functioning quantum computers, but they are highly specialized and only able to run very specific kinds of calculations. While these companies pour resources into optimizing these computers to make them useful for more practical applications– a reality we are expected to see in 10 years or so– some independent researchers, such as Dr. Michael Geller at the University of Georgia, are thinking even further ahead.

Before we talk about how researchers like Geller are working to further revolutionize quantum computing, we first need to understand what quantum computing is, and what exactly is at stake in all of this.

The Power of the Very Small

In order to understand what makes quantum computers so powerful, we need to dive into a very strange world, in which the laws of physics we are familiar with no longer apply. At the subatomic scale of quantum mechanics, the laws that govern the behavior of small particles tend to become more mysterious the more we try to understand them.

Quantum mechanics is mysterious in the sense that physicists can use its laws to make predictions, but have yet to understand why many of these laws work the way they do.

Beyond this inherent weirdness, the laws of quantum mechanics that physicists have been able to describe can be difficult to grasp without a background in the field.

Quantum mechanics pioneer Richard Feynman, after he won the 1965 Nobel Prize in Physics, said “Hell, if I could explain it to the average person, it wouldn’t have been worth the Nobel Prize.”

Fortunately, we don’t need to have a Feynman-level understanding of this stuff to see what’s so cool about quantum technology.

Quantum computers operate by taking advantage of the unusual laws of quantum mechanics and controlling the behavior of fundamental particles (the supposed building blocks of our universe, which can’t be broken down any further) in a way that is very different from how classical computers work.

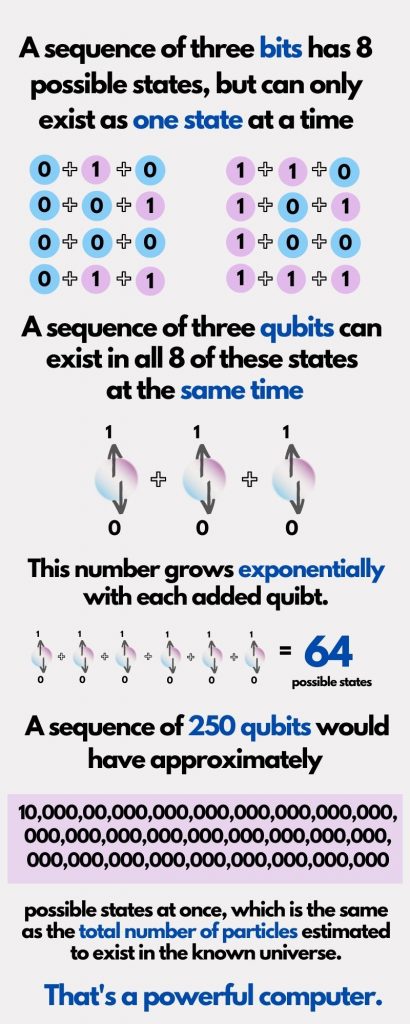

Classical computers carry information in the form of bits, short for binary digits, which can be programmed to represent a single binary value, either 0 or 1.

Bits can be stored in a digital device or any other physical system that has two distinct states, such as the two positions of an electrical switch, two distinct voltage levels in a circuit, or two directions of magnetization.

In most modern computers, bits are stored in tiny electrical switches called transistors, which can be turned either “on” or “off” to represent a 1 or a 0.

Everything your computer does, from searching for cat videos to analyzing spreadsheets, it does by processing sequences of bits.
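As a small illustration, the Python sketch below shows how the word “cat” is stored as a sequence of bits. It assumes the common UTF-8 text encoding, in which each English letter takes eight bits (one byte); the function name `to_bits` is just for this example.

```python
def to_bits(text: str) -> str:
    """Return the bits (as '0'/'1' characters) that encode `text` in UTF-8."""
    # Each byte is 8 bits; the "08b" format pads with leading zeros.
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


print(to_bits("cat"))  # 01100011 01100001 01110100
```

Each group of eight 0s and 1s corresponds to eight switches, each set either on or off.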

Your computer can only process a certain number of bits at one time, and is therefore very limited in computational capacity. But what if it could contain all possible combinations of 0’s and 1’s at once, and manipulate their state to run unimaginably complex calculations at the drop of a hat?

Introducing quantum bits, or qubits (pronounced cue-bits). Rather than being in a state of either 0 or 1, qubits can actually exist in a state of being both at the same time, what physicists call a superposition. Welcome to the unintuitive world of quantum mechanics, where you’ll find a cat that is both dead and alive until you check on it, and particles that exist in all of their possible states at once until they interact with their environment.

While Schrödinger’s cat is just a metaphor, what it implies for fundamental particles is very real.

Quantum computers can build qubits out of several kinds of physical systems, including electrons, photons, trapped atoms and tiny superconducting circuits. Since each qubit can represent both a 0 and a 1 at the same time, you can shove a lot more processing power into the computer than if you had to have a different set of bits for each possible combination of 0’s and 1’s.
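To give a rough sense of what “both 0 and 1” means in practice, physicists describe a qubit with two numbers called amplitudes, one for 0 and one for 1; the square of each amplitude’s size gives the chance of measuring that value. The Python sketch below is a classical simulation for illustration only (the names `probabilities` and `measure` are hypothetical), using an equal superposition that comes out 0 or 1 with equal odds when it is measured.

```python
import math
import random

# A qubit state as two amplitudes: (amplitude of 0, amplitude of 1).
# This equal superposition is "both 0 and 1" until it is measured.
state = (1 / math.sqrt(2), 1 / math.sqrt(2))


def probabilities(amplitudes):
    """Born rule: the probability of each outcome is |amplitude| squared."""
    return [abs(a) ** 2 for a in amplitudes]


def measure(amplitudes, rng: random.Random) -> int:
    """Simulate one measurement: the superposition collapses to 0 or 1."""
    p0, _p1 = probabilities(amplitudes)
    return 0 if rng.random() < p0 else 1


print(probabilities(state))  # roughly [0.5, 0.5]
rng = random.Random(42)
samples = [measure(state, rng) for _ in range(10)]
print(samples)  # a random mix of 0s and 1s
```

Each individual measurement gives a plain 0 or 1; the superposition shows up only in the odds of getting each one.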

To understand the scale of this power, imagine you have a coin that can be heads or tails. This coin therefore has two possible states. If you had three coins, there would be eight possible states. (If you’re curious, the number of states is 2 to the power of how many “coins” you have, e.g. 2³ = 8.)
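The coin-counting rule can be checked directly by listing every combination. This short Python sketch (the function name `all_states` is just for illustration) uses the standard library’s `itertools.product` to enumerate heads (H) and tails (T) for three coins.

```python
from itertools import product


def all_states(num_coins: int) -> list[tuple[str, ...]]:
    """List every heads/tails combination for `num_coins` coins."""
    return list(product("HT", repeat=num_coins))


states = all_states(3)
print(len(states))  # 8, which is 2**3
print(["".join(s) for s in states])
# ['HHH', 'HHT', 'HTH', 'HTT', 'THH', 'THT', 'TTH', 'TTT']
```

Adding one more coin doubles the list, which is why the count grows as a power of 2.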

Now imagine you’ve got 250 coins. The number of possible states you have would be 2 to the power of 250, which is a very large number: roughly 1.8 × 10⁷⁵, or 18 followed by 74 zeroes. For comparison, physicists estimate that the entire known universe contains about 10⁸⁰ atoms.
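Python works with whole numbers of any size exactly, which makes it easy to check how big 2 to the power of 250 really is. The sketch below also compares it with 10⁸⁰, a figure often quoted as a rough estimate for the number of atoms in the observable universe.

```python
count = 2 ** 250  # Python integers have arbitrary precision, so this is exact

print(len(str(count)))   # 76 digits
print(f"{count:.2e}")    # 1.81e+75
print(count < 10 ** 80)  # True
```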

If you’re following, you may have realized that just writing down every possible combination of heads and tails from 250 coins would take far more storage than any classical computer could ever hold.

Now suppose that instead of coins, you have 250 qubits. This set of qubits can exist in a superposition of all of these possible combinations at the same time. Writing out a full description of that state would take more information than any classical computer could ever store.
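One way to see why ordinary computers struggle here: a common way to simulate qubits on a classical machine stores one complex number (an amplitude) for every possible combination of 0s and 1s. The Python sketch below assumes 16 bytes per amplitude (two 64-bit floating-point numbers, a typical choice); the constant and function names are hypothetical.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number = two 64-bit floats


def statevector_bytes(num_qubits: int) -> int:
    """Memory needed to store all 2**num_qubits amplitudes of a qubit register."""
    return BYTES_PER_AMPLITUDE * 2 ** num_qubits


for n in (10, 30, 50, 250):
    print(n, "qubits:", f"{statevector_bytes(n):.3e}", "bytes")
```

Under these assumptions, 30 qubits already need about 17 gigabytes and 50 qubits about 18 petabytes, while the figure for 250 qubits is a 77-digit number of bytes.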

There is one more special ingredient in all of this, and it is what gives these quantum properties their power: a property that two or more particles can share, called entanglement.

When two particles are entangled, they behave as a single linked system, no matter how far apart they are. For two entangled electrons, for example, measuring a property of one electron, such as whether its spin is up or down (spin is a quantum property that particles like electrons have), instantly determines what a measurement of the other electron will show.

Einstein famously referred to this phenomenon as “spooky action at a distance,” and although we have gained some understanding of it, it still baffles physicists to this day.

Luckily, physicists don’t have to fully understand why entanglement works in order to reap its benefits, and quantum computers can use entanglement to manipulate qubits and carry out calculations.
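The Python sketch below mimics the measurement statistics of one kind of entangled pair, written (|00⟩ + |11⟩)/√2, where 0 and 1 could stand for spin-up and spin-down. It is only an illustration under that assumption: it reproduces what you would see measuring both particles the same way, but it does not explain how entanglement works, and a simple classical model like this cannot reproduce everything entanglement does. The names `AMPLITUDES` and `measure_pair` are hypothetical.

```python
import random

# Amplitudes of the entangled state (|00> + |11>) / sqrt(2),
# listed for the joint outcomes 00, 01, 10 and 11.
AMPLITUDES = {"00": 2 ** -0.5, "01": 0.0, "10": 0.0, "11": 2 ** -0.5}


def measure_pair(rng: random.Random) -> str:
    """Sample one joint measurement of both particles (Born rule)."""
    outcomes = list(AMPLITUDES)
    weights = [abs(a) ** 2 for a in AMPLITUDES.values()]
    return rng.choices(outcomes, weights=weights)[0]


rng = random.Random(0)
results = [measure_pair(rng) for _ in range(1000)]
# Each particle on its own looks like a fair coin flip...
print(sum(r[0] == "1" for r in results) / len(results))  # close to 0.5
# ...but the two particles always agree.
print(all(r[0] == r[1] for r in results))  # True
```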

The catch is that it is very difficult for researchers to harness the power of entanglement and other properties because of how fragile these quantum systems are, which we’ll talk more about later.

Small-Scale Technology Makes for Large-Scale Impact

According to Geller, the impact that most people see from quantum computers will likely be second-hand. To understand how, think about the supercomputers that tech companies use today.

Supercomputers are used to simulate aircraft design, build more fuel-efficient engines, and research treatments for conditions such as heart disease by simulating the inner workings of the heart with 3D models, according to the Office of Energy Efficiency and Renewable Energy.

These machines are enormous, and are often kept in entire buildings dedicated solely to housing them.

All of these are examples of uses most of us aren’t directly aware of, but that impact our lives nonetheless. According to Geller, we will likely see a similar situation with quantum computers.

“I think it’s in these very indirect ways that [most people] won’t even be necessarily aware of, such as in drug design and material design,” he said. “It’ll be kind of behind the scenes as a special purpose scientific tool.”

Quantum computers, once they can be used at their full capacity, will be able to simulate complex structures that will allow for innovative research in pharmaceutical and material design, for example.

“Suppose you want to design a new drug and you want to simulate the chemical reactions,” Geller said. “That is something you could use a quantum computer for.”

Or, suppose you wanted to design a lightweight material to build an aircraft. You could theoretically design a material that is both very lightweight and very durable from the ground up by simulating its structure using a quantum computer, according to Geller.

Many experts, including Geller, predict that the most profound implications of quantum computing will lie in cybersecurity.

Your browsing, email and banking data are kept secure through a process called encryption. One common approach, called public-key encryption, scrambles your information with a public key that anyone can see as it travels over the internet, and gives you a top secret private key that only you can use to unscramble it.

The problem is that the public key could theoretically be used to calculate your secret private key and access your private information, although with today’s methods those calculations would take a regular computer an impractically long time to complete.
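The article doesn’t name a specific scheme, so the sketch below assumes RSA, a widely used public-key system whose security rests on how hard it is to factor a large number into its two prime factors. It uses the classic tiny textbook numbers, which are completely insecure; real keys use primes hundreds of digits long. The function name `crack_private_key` is hypothetical, and it breaks the toy key by plain trial division.

```python
import math

# Toy RSA with tiny, insecure numbers (a classic textbook example).
p, q = 61, 53            # secret primes
n = p * q                # 3233, part of the public key
e = 17                   # public exponent, also part of the public key
phi = (p - 1) * (q - 1)  # 3120, easy to compute only if you know p and q
d = pow(e, -1, phi)      # private key 2753 (modular inverse needs Python 3.8+)


def encrypt(m: int) -> int:
    return pow(m, e, n)  # anyone can do this with the public key (n, e)


def decrypt(c: int) -> int:
    return pow(c, d, n)  # only the holder of the private key d can do this


def crack_private_key(n: int, e: int) -> int:
    """Recover d from the public key alone by factoring n with trial division."""
    for candidate in range(2, math.isqrt(n) + 1):
        if n % candidate == 0:
            p_found, q_found = candidate, n // candidate
            return pow(e, -1, (p_found - 1) * (q_found - 1))
    raise ValueError("n has no nontrivial factor")


c = encrypt(65)
print(c, decrypt(c))            # 2790 65
print(crack_private_key(n, e))  # 2753
```

Trial division finishes instantly here, but the work grows so quickly with the size of the primes that it is hopeless for real key sizes.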

A quantum computer, however, could do those calculations very quickly. Quantum computers likely won’t reach this capability for another 10 years or so, but government agencies like the NSA are already preparing to switch to protocols designed to resist attacks from quantum computers.
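The article doesn’t name the method, but the best-known quantum approach is Shor’s algorithm, which turns factoring into the problem of finding the period of a repeating pattern. The Python sketch below shows only the classical bookkeeping around that step, finding the period by brute force, which is exactly the slow part a quantum computer would speed up. The function names are hypothetical and the numbers deliberately tiny.

```python
from math import gcd


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.
    This is the step Shor's algorithm would hand to a quantum computer."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_period(n: int, a: int):
    """Try to split n into two factors using the period of a modulo n."""
    g = gcd(a, n)
    if g != 1:
        return tuple(sorted((g, n // g)))  # lucky guess: a already shares a factor
    r = find_period(a, n)
    if r % 2 == 1 or pow(a, r // 2, n) == n - 1:
        return None  # this choice of a fails; the algorithm retries with another
    half = pow(a, r // 2, n)
    return tuple(sorted((gcd(half - 1, n), gcd(half + 1, n))))


print(factor_via_period(15, 7))  # (3, 5)
print(factor_via_period(21, 2))  # (3, 7)
```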

Annahstashia Jebraelli, a PhD student at UGA who studies quantum computing under Geller, expressed her excitement about the emerging field.

“I think it’s gonna completely change the world,” she said. “A lot of people know about the space race and stuff like that, but I feel like the quantum computer race could change the world even more than the space race ever did.”

So if all of these tech companies have already built quantum computers, what’s preventing them from reaching their full capabilities?

Turn Down the Noise!

The quantum computers that exist currently can only solve very specialized problems, and they have a long way to go before they can be used for anything truly transformative.

The central obstacle is that all of the power of a quantum computer comes from entanglement, and it’s very difficult to keep this special property from being disrupted.

“Entanglement is a very fragile property,” Geller said. “It’s very easily ruined due to noise and errors from the environment, errors in operation, there’s all kinds of errors.”

Noise is a term physicists use to describe anything that disrupts a quantum system by interacting with it, causing it to behave classically.

Because the universe is made up of fundamental particles that obey the laws of quantum mechanics, quantum laws govern everything. However, we don’t see this at the larger, classical scale because of how fragile quantum systems are.

“Big things like us are so noisy because we’re at room temperature and have atoms running around,” Geller said. “You never see a person in two places [in the way that] a particle can be in two places at once.”

One way that quantum computers are protected from noise is by keeping them at less than one degree above absolute zero, which is minus 273.15 degrees Celsius. Absolute zero is the lowest temperature that is theoretically possible, where the motion of particles is almost completely halted.

To keep them this cold, quantum computers are housed in what are essentially giant freezers.

Beyond keeping them frozen at near absolute zero, a central piece of what researchers are trying to accomplish in the coming years is improving quantum error correction.

The goal of quantum error correction is to protect fragile quantum systems from noise and other errors, such as gate errors, measurement errors, and state preparation errors. All of these terms describe situations that cause quantum systems to decohere, meaning they are no longer in a superposition of states and lose the trait that makes them so powerful for computing.
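Quantum error correction is subtler than its classical counterpart, partly because qubits can’t simply be copied, but its core idea (spreading information across extra physical units so that errors can be caught and undone) has a simple classical analogue: the three-bit repetition code. The Python sketch below is that classical analogue, not a quantum error-correcting code. Its noise model, in which each bit flips independently with probability p, is an assumption, and the function names are hypothetical.

```python
import random


def encode(bit: int) -> list[int]:
    return [bit, bit, bit]  # store three copies of the logical bit


def add_noise(bits: list[int], p: float, rng: random.Random) -> list[int]:
    # Assumed noise model: each copy flips independently with probability p.
    return [b ^ 1 if rng.random() < p else b for b in bits]


def decode(bits: list[int]) -> int:
    return 1 if sum(bits) >= 2 else 0  # majority vote undoes any single flip


def logical_error_rate(p: float) -> float:
    """Exact chance that two or three copies flip, so the vote comes out wrong."""
    return 3 * p**2 * (1 - p) + p**3


p = 0.1
rng = random.Random(1)
trials = 100_000
failures = sum(decode(add_noise(encode(0), p, rng)) != 0 for _ in range(trials))
print(failures / trials)      # close to 0.028
print(logical_error_rate(p))  # about 0.028, versus 0.1 for an unprotected bit
```

The protection only helps when errors are rare enough: if p is small, needing two simultaneous flips makes a failure much less likely than a single flip.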

Geller previously worked in the fields of quantum error correction and quantum gate design. He provided theoretical support for one of the leading quantum computing programs, which was based at the University of California, Santa Barbara at the time and was later acquired by Google.

Researchers at Google have since advanced quantum error correction even further. The quantum computer that Google is currently working on, called Sycamore, has 53 qubits, but having qubits isn’t useful unless they can hold onto their quantum properties.

Big leaps in progress will come from optimizing quantum error correction, not from simply adding more qubits to the computer.

“That’s why it’s gonna take another 10 or 20 years before you have error correction and you can actually use quantum computers,” Geller said. “It’s not that you just need to add more qubits. The problem is, you need to go from the noisy qubits to error-corrected qubits, that’s the big jump that has to occur.”

According to the deep physics venture fund Quantonation, the total capital invested in quantum computing could reach up to $3 billion by the end of 2021.

While tech companies pour their resources into bringing their versions of quantum computers up to par, independent researchers like Geller are using their abilities to think about what an even more advanced, future version of quantum computers may look like.

Quantum 2.0: The Next Generation

According to Geller, two important aspects of this next generation, which he calls Quantum Computing 2.0, would be making quantum computers smaller and getting them to function at room temperature.

“You can’t put it in a smartphone, a quantum computer chip, but that’s something that people will eventually want to do,” Geller said. “That might be for computing, that might be part of the navigation, it could be something else. There’s lots of reasons to want to make a small quantum computing chip that can be in a smartphone, and for that it would have to be at room temperature.”

He said that this in particular is not something he is working on, but that other independent researchers are.

Geller’s work this year has transitioned from researching quantum error correction to very speculative, theoretical work that may have the potential to influence what is considered possible in quantum computing.

“I started working in this field 10 years ago, when it was a much smaller field,” Geller said. “And you could, as an individual researcher at the University of Georgia, actually make an impact on things.”

Geller said things are much different today. Now that Google has a $10 billion quantum computing program, figuring out how he can still make an impact on the field on his own has driven him to pursue more speculative research.

The theoretical framework Geller is currently exploring centers on what he calls “nonlinear qubits,” a term that will likely turn up nothing in an internet search because nonlinear qubits are not technically supposed to exist.

It has been well investigated and well known since the 1920s, Geller said, that quantum mechanics is an inherently linear theory.

“It’s also known with some idealized models, that if you did have nonlinearity in quantum physics, it would give you greatly enhanced computational power,” Geller said.

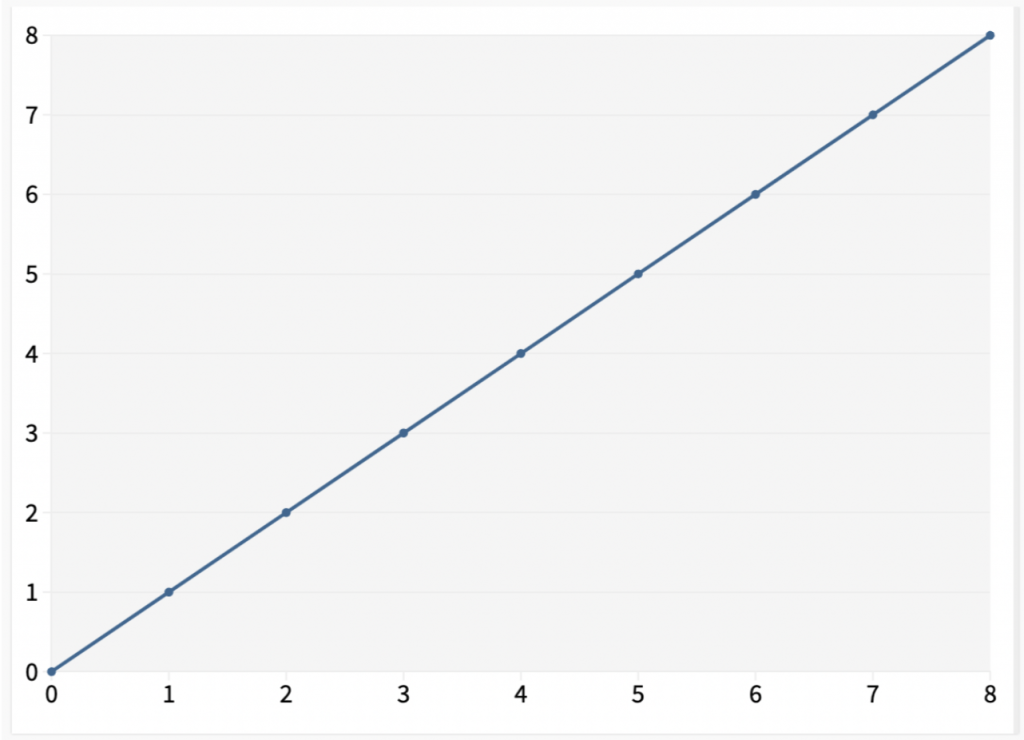

Explaining the difference between linearity and nonlinearity in this sense would involve some math.

For our purposes, the simplest example of linearity is a straight line. Graphing an equation where the output (Y) is exactly proportional to the input (X) produces a straight line, hence the term linear. Although many physical systems are described by such linear models, those models are only approximations: most physical systems involve some degree of nonlinearity. Surprisingly, quantum mechanics seems to be exactly linear.
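The property that separates linear from nonlinear rules can be checked numerically. A linear rule f satisfies f(a·x + b·y) = a·f(x) + b·f(y): scaling and adding the inputs simply scales and adds the outputs. In quantum mechanics this is the superposition principle, which is why a superposition of inputs evolves into the matching superposition of outputs. The Python sketch below tests the rule at one point for a straight line and for a parabola; the function names are hypothetical.

```python
import math


def is_linear_at(f, x, y, a=2.0, b=-3.0) -> bool:
    """Test the rule f(a*x + b*y) == a*f(x) + b*f(y) at one choice of inputs."""
    return math.isclose(f(a * x + b * y), a * f(x) + b * f(y))


def straight_line(x):
    return 4 * x  # output exactly proportional to input


def parabola(x):
    return x ** 2  # a simple nonlinear function


print(is_linear_at(straight_line, 1.5, 0.5))  # True
print(is_linear_at(parabola, 1.5, 0.5))       # False
```

Passing the check at one point doesn’t prove a function is linear, but failing it proves that it isn’t.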

We don’t need a complete understanding of the implications of nonlinearity in quantum mechanics to get the point– that slightly altered rules would change the computational power of the system.

Before the idea of quantum computers took off in the 1990s, people thought that computation was a “mathematically abstract concept” that was separate from the laws of nature, according to Geller.

It wasn’t until quantum computing was conceptualized that people realized computational ability can stem from the actual hardware being used to perform computations.

Geller said that in 1998, MIT physicists Daniel Abrams and Seth Lloyd got people thinking about these “nonlinear” ideas by putting forth the question, “What if the laws of nature are even different and maybe more exotic than we think they are, how would that affect the power of a computer?”

Abrams and Lloyd’s paper essentially said that if quantum mechanics were nonlinear, then in a very idealized setting we would have enormous computational power.

“In fact, it has so much power that in the computer science world, it’s really considered almost a joke to think that it would be possible to have that much computing power,” Geller said. “And that’s also why, you can see, I’m very speculative. I’m coming from a point of view saying ‘I acknowledge that quantum physics is fundamentally linear’, but it is also known that you can, if you design a physical system in an approximate way, have the nonlinearity that you need.”

Geller said he is in the very early stages of this work. He is currently trying to understand what kinds of nonlinearity are out there, and what these models may look like.

“It is challenging to even formulate a consistent, fundamentally nonlinear quantum theory in accordance with general principles,” Geller wrote in his new paper, the first he has written on this subject. “In this paper we consider the application of effective quantum nonlinearity to information processing, while at the same time accepting that quantum physics is fundamentally linear. Effective means that it arises in some approximate (e.g., low-energy) quantum description, is a consequence of constraints on a linear system, or emerges in the limit of a large number of particles.”

Geller said that a few physicists in the United States are looking into this line of research, but that quite a few researchers in Poland are doing related work right now.

Jebraelli, the grad student who is working with Geller, said she is very excited to learn more about these speculative theories.

“It’s something that’s pretty novel and brand new, so I like the fact that it would be, hopefully, doing something that’s really advancing the field further,” she said. “A lot of physics research that’s done is almost applying the same methods just to different systems– which is not to say that it’s easy, because it’s not– but I like that this is something brand new.”

Geller said that if all of this research were to go to plan, in the future he would consider building nonlinear qubits that would attach to a regular quantum computer, which would, theoretically, greatly increase its computational capacity.

For many, the future of quantum computing is as exciting as it is mysterious. Understanding quantum systems and how to manipulate them will help scientists build quantum computers that have the potential to transform a wide range of fields, as well as influence the role technology plays in our lives.

While we can’t be sure exactly when quantum technology will take its next big leaps, we can expect that when it does, it will be a leading factor in propelling us into a new era of technological advancement.

Alaina O’Regan is a student majoring in journalism at the University of Georgia.
